AR comes to Google search

Without question, the biggest news to emerge from the Google I/O 2019 event was the Pixel 3a and Pixel 3a XL smartphones. That said, Google also made a bevy of announcements that were equally deserving of the spotlight.

Google opened I/O 2019 by announcing augmented reality models for Google search results. Users can rotate these models and view supported animations to see how they move. If the device supports Google AR, the models can also be projected onto a real-world surface. During the presentation, Google demonstrated an AR model of an arm’s muscle structure and a rotatable shoe to represent its vision for a richer online shopping experience.

Better Google Lens

Google Lens, Google’s object and image recognition feature, has already been used over 1 billion times worldwide. Its relationship with its users is symbiotic: users get to better understand what they’re looking at, and the image data helps Google index the world. Later this month, Google Lens will be able to help you pick out what to eat by scanning a menu and highlighting its most popular items. The highlights are interactive, too; users will be able to tap on them to bring up more context on a particular dish. In addition, Lens will be able to scan a receipt to calculate the tip and split the bill. Google is also partnering with magazines and book publishers to bring up animated recipes when a user scans the page.
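The tip-and-split arithmetic itself is simple once the subtotal has been read off the receipt. The sketch below is only an illustration of that arithmetic, not Google’s implementation; the function name `split_bill` and its parameters are hypothetical, and the receipt scanning step is assumed to have already produced the subtotal.

```python
from decimal import Decimal, ROUND_HALF_UP


def split_bill(subtotal: str, tip_percent: int, people: int) -> dict:
    """Return the tip, the total, and each person's share, rounded to cents.

    Hypothetical helper for illustration only; it assumes the subtotal
    has already been extracted from the scanned receipt.
    """
    if people < 1:
        raise ValueError("people must be at least 1")

    cents = Decimal("0.01")
    amount = Decimal(subtotal)

    # Tip is a percentage of the subtotal, rounded to the nearest cent.
    tip = (amount * Decimal(tip_percent) / Decimal(100)).quantize(
        cents, rounding=ROUND_HALF_UP
    )
    total = amount + tip

    # Each share is rounded to cents, so shares may differ from the
    # exact total by a cent or two when the total does not divide evenly.
    per_person = (total / people).quantize(cents, rounding=ROUND_HALF_UP)

    return {"tip": tip, "total": total, "per_person": per_person}


result = split_bill("80.00", tip_percent=20, people=4)
print(result["tip"], result["total"], result["per_person"])
```

`Decimal` is used instead of floating point so that currency amounts round predictably.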

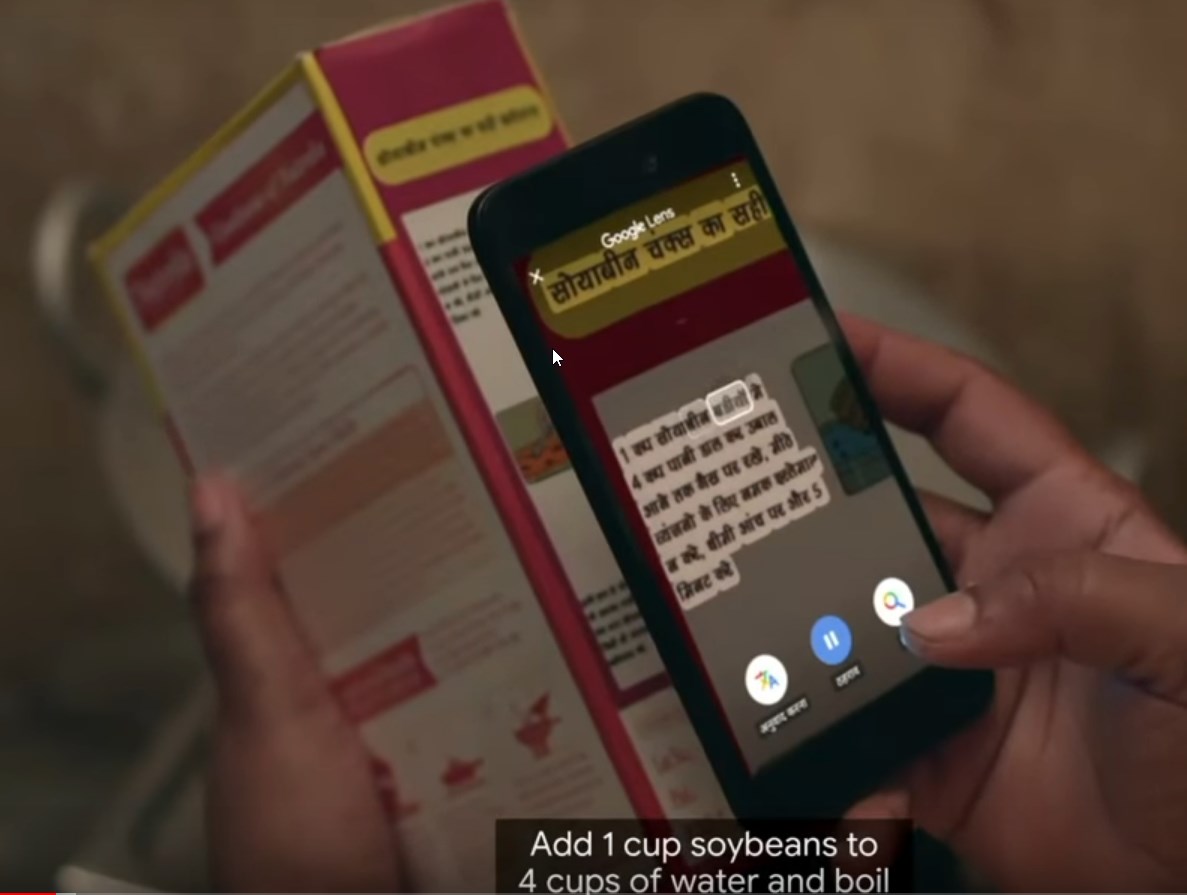

Google Go gets text-to-speech

Google Go, Google’s AI-powered search app, is designed for the bottommost budget phones (think phones in the $35 range). Targeting users of these devices who have low literacy, Google is adding on-the-fly translation and text-to-speech capabilities to Google Go. When the user scans a piece of text, Google Go offers to read it aloud and highlights each word as it progresses. Even more impressive, Google was able to compress the voice recognition data for a dozen languages to just 100KB.

Duplex for the web

Google is also looking to bring Google Duplex to the web. Duplex was first announced at Google I/O 2018 as an appointment-booking feature that mimics human interactions. Currently, Duplex on the web can only perform a small set of actions. Google CEO Sundar Pichai demonstrated how Duplex was able to help a user book a rental car for an upcoming trip based on the user’s previous car preferences and dates of travel. This feature will arrive “later this year”.

Supercharged Google Assistant

The most overhauled item on this list is one that’s familiar to many. At Google I/O 2019, Pichai announced a few functional as well as operational changes to the Google Assistant.

Google uses three AI models to process speech. Together, they total 100GB of data, far too much to store on a phone, so speech has to be sent to Google’s servers for processing. Privacy concerns aside, a major downside to this is, of course, the need for an internet connection. Google has now managed to compress the 100GB of models into just 500MB, small enough to be installed locally on smartphones. Considering the commodious phone storage we have today, 500MB is more than affordable. With this, Google will be deploying a local version of its speech recognition AI to Android smartphones, confining speech recognition to the user’s device.
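To put those two figures in perspective, the reduction works out to roughly 200 to 1. The short calculation below uses only the numbers quoted above; the decimal conversion (1GB = 1,000MB) is an assumption, and with binary units the ratio would be slightly higher.

```python
ORIGINAL_GB = 100    # combined size of the three speech models, as quoted above
COMPRESSED_MB = 500  # on-device size, as quoted above
MB_PER_GB = 1000     # assumption: decimal units (1GB = 1,000MB)


def compression_ratio(original_gb: float, compressed_mb: float,
                      mb_per_gb: int = MB_PER_GB) -> float:
    """Return how many times smaller the compressed models are."""
    return (original_gb * mb_per_gb) / compressed_mb


ratio = compression_ratio(ORIGINAL_GB, COMPRESSED_MB)
percent_of_original = 100 / ratio

print(f"Compression ratio: {ratio:.0f}x")
print(f"Compressed size is {percent_of_original:.1f}% of the original")
```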

Eventually, you’ll be able to queue multiple commands through Assistant instead of prefixing every one with “hey Google”. Assistant will also make written conversations such as texts and emails more fluid; users will be able to dictate through their phones to reply to texts, compose emails, add attachments, and perform more in-depth searches. Assistant will also become content-aware and format emails according to the user’s intent.

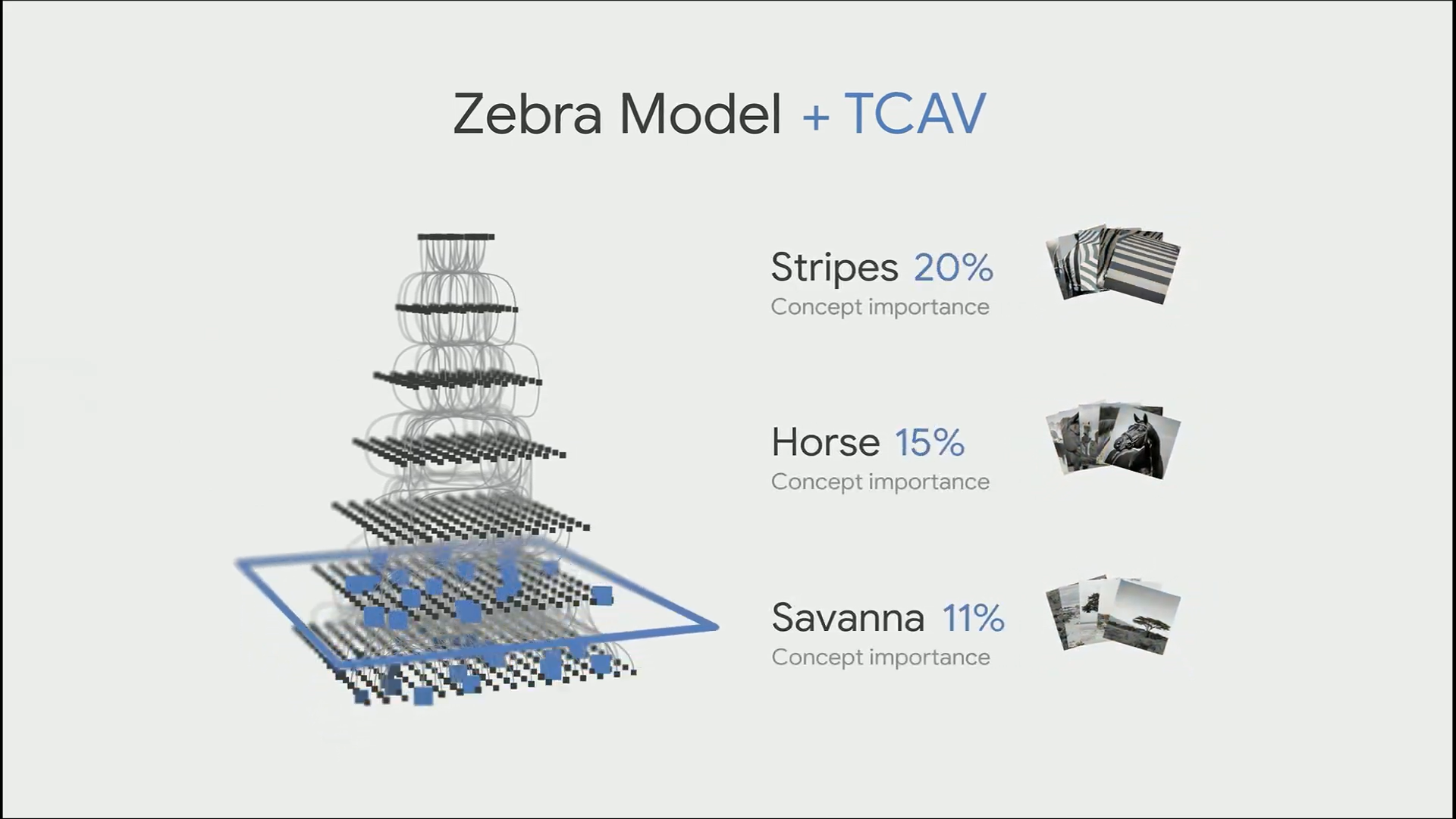

More transparent AI

A challenge for today’s AIs is how they account for bias. Because of their complex design and autonomous operation, researchers can’t always tell exactly how an AI is weighing every factor. Google’s solution is TCAV, which stands for Testing with Concept Activation Vectors. Rather than explaining a prediction in terms of low-level features such as individual pixels, TCAV measures how strongly high-level, human-understandable concepts influence the model’s output. This helps to provide insight into how AI models balance variables like gender, age, and race.
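As a rough illustration of the idea, the TCAV score for a concept can be read as the fraction of examples whose class score would rise if the model’s internal activations moved in the direction of that concept. The sketch below is a simplified, dependency-free toy and not Google’s implementation: it assumes the concept activation vector (`cav`) and the per-example gradients of the class score with respect to a layer’s activations have already been computed, and all numbers are made up for the example.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def tcav_score(gradients, cav):
    """Fraction of examples with a positive directional derivative along the CAV.

    gradients: one gradient vector per example (class score with respect
               to the chosen layer's activations). Assumed precomputed.
    cav:       the concept activation vector for that layer. Assumed precomputed.
    """
    if not gradients:
        raise ValueError("need at least one gradient vector")
    positive = sum(1 for g in gradients if dot(g, cav) > 0)
    return positive / len(gradients)


# Hypothetical toy values for illustration only.
cav = [1.0, 0.0, -1.0]
gradients = [
    [0.5, 0.2, -0.1],
    [-0.3, 0.9, 0.4],
    [0.2, -0.1, 0.0],
    [0.1, 0.0, 0.3],
]

score = tcav_score(gradients, cav)
print(f"TCAV score: {score:.2f}")
```

A score near 1 would suggest the concept consistently pushes the model toward the class, while a score near 0 would suggest it pushes away.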

Live Caption and Live Relay

Live Caption and Live Relay are two features designed to increase accessibility. They work in tandem with the aforementioned local AI features to automatically transcribe videos and read text from captured images aloud to users. This benefits more than just people with hearing impairments; the rest of us can now watch videos in public without audio.

Additionally, Google has launched Project Euphonia for people who stutter, have had strokes, or have experienced any life events that prevent them from speaking normally. Their voice data is not normally used to train speech recognition, and their speech is therefore hard for Google’s general speech AI to recognize. Project Euphonia aims to solve that with an AI model that’s trained specifically on these otherwise discarded voice snippets. Project Euphonia reaches beyond voice; it can also be trained to recognize facial expressions and predict what word the user is trying to form.

Android Q

As Android Q inches closer to its August launch date, Google disclosed more of the features we can expect it to have.

Android Q will have better foldable phone support. Google showed on stage that it can seamlessly transition between folded and unfolded modes without having to relaunch an app. It will also bring floating chat bubbles similar to Facebook Messenger’s chat heads. Google once again emphasized the improved local AI features that will come with Android Q. Saving the best for last, a system-wide dark mode will finally arrive with Android Q. Those who are impatient can enroll in the Android Q beta testing program right now to see what the Dark theme looks like.

Google Nest Hub Max

The Google Nest Hub Max expands the Google Nest Hub smart display family, sporting a larger screen and a front camera. Its 10” display is mounted on a speaker stand that’s compatible with the Google Assistant. Once it syncs up with your Google account, it can display pictures stored on Google Photos and play videos. The front camera supports motion sensing for controlling media playback and stopping alarms. The Google Nest Hub Max is available now for US$229.