Calculating the potential return on a new server or application is relatively straightforward: If the purchase enables an organization to do more in less time, profits are likely to go up. That makes a convincing case. But in the absence of obvious benefits or immediate gains, the computation for backup and recovery is not as easy.

Quantifying the return on an investment in backup and recovery involves estimating the financial impact of worst-case future scenarios: effectively, thinking the unthinkable and figuring out the potential cost of doing nothing about it. As a result, a backup and recovery solution often is justified only as part of a project — such as an application migration or a data center upgrade — with clearly definable financial benefits. However, over time, that approach can lead to a mix of legacy platforms and application-specific solutions, each with its own dependencies, backup windows, recovery times, support requirements and so on.

To help organizations evaluate the costs and benefits of a modern, integrated backup and recovery solution, IT research organization Computing Research surveyed 120 data center professionals in the United Kingdom.1 The online survey was designed to find out how those charged with keeping enterprise IT systems operational quantify risk and, more importantly, how they use that information to justify spending on backup and recovery.

No one expects a hedgehog in the air conditioning

Ask for a list of potential disasters that might affect enterprise IT systems, and flood and fire spring to mind. Disasters, however, don’t just follow in the wake of cataclysmic events such as earthquakes and typhoons. Software bugs, malware and hackers can be just as damaging.

But quite surprising are the seemingly inconsequential or unforeseeable actions that nonetheless can have a big impact on data loss or downtime. Take, for example, a few anecdotes related by survey respondents:

- A contractor accidentally leaned on the kill switch, turning off an entire data center in moments.

- An operator became sick over a rack of switches as a result of finding a decomposing and very smelly hedgehog in the air conditioning.

- One company prudently invested in a dedicated business resiliency center, but when a nearby electricity substation exploded, the center turned out to be inside the area cordoned off by the police, preventing its use.

- When trying to recover a crucial storage array, another company found that although tapes had been loaded nightly into the backup library as scheduled, the backup routine itself had been discontinued.

Nearly all respondents had an interesting anecdote to tell, indicating that they were mindful of potential threats to IT systems. Most respondents also were aware of the inadequacies of legacy backup and recovery systems in protecting against such threats, leading 43 percent to adopt integrated systems that are designed to protect their platforms and applications across both physical and virtualized infrastructures.

That’s good news, but on the flip side it reveals that more than half of survey respondents are less well prepared; many report struggling with legacy products that are poorly equipped or unable to handle the latest technologies. In this respect, just under one-fifth said they relied on recovering whole servers rather than bringing individual applications back online should problems arise. This approach could be one reason why complex and slow recovery procedures rose to the top of the list when respondents who use a mix of legacy products were asked to highlight their backup and recovery issues.

Slow recovery times: Bane or boon?

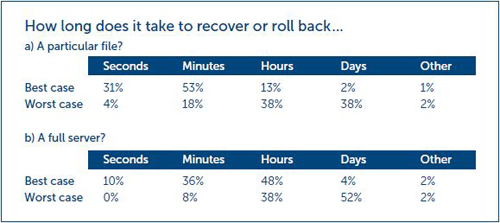

When asked specifically about how quickly they thought they could recover from a disaster, respondents supplied answers that belied the general impression of their organizations competing in fast-moving, always-on, global marketplaces in which every second counts.

One might expect most enterprises to be able to recover lost data and even entire servers in seconds or at least minutes. However, that expectation is wildly optimistic for the many respondents who thought it could take days, at worst, to recover lost data or a full server (see figure).

Given the breadth and consistency of the answers, slow recovery times appear to be the bane of IT departments across organizations of all types and sizes. But consider this: Those very same lengthy recovery estimates can be used to quantify the financial impact of not investing in solutions to address the issue.

The longer it takes to recover a server or application, the more business may be lost. The cost of that lost business can, at the very least, be estimated and used when bidding for the purchase of new backup and recovery solutions or when updating an existing setup to cope with technological and business changes.
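As a rough illustration of that estimate, the Python sketch below multiplies an assumed hourly revenue at risk by the expected recovery time. The function name, the `share_affected` parameter and every figure are hypothetical; none of them comes from the survey, and a real estimate would also need to account for costs such as penalties or lost customers.

```python
def downtime_cost(recovery_hours, revenue_per_hour, share_affected=1.0):
    """Estimate business lost while a server or application is unavailable.

    All inputs are assumptions supplied by the organization:
    recovery_hours   -- expected time to restore the resource
    revenue_per_hour -- revenue that depends on the resource, per hour
    share_affected   -- fraction of that revenue actually lost (0.0 to 1.0)
    """
    if recovery_hours < 0 or revenue_per_hour < 0:
        raise ValueError("hours and revenue must be non-negative")
    if not 0.0 <= share_affected <= 1.0:
        raise ValueError("share_affected must be between 0 and 1")
    return recovery_hours * revenue_per_hour * share_affected


# Hypothetical comparison: a two-day recovery versus a 15-minute recovery
# for a system that supports 5,000 in revenue per hour.
current = downtime_cost(48, 5_000)
improved = downtime_cost(0.25, 5_000)
avoided_loss = current - improved
print(f"Estimated loss avoided per incident: {avoided_loss:,.0f}")
```

Comparing the avoided loss with the price of a proposed solution gives a first-order figure to include in a purchase bid.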

In the pressure cooker

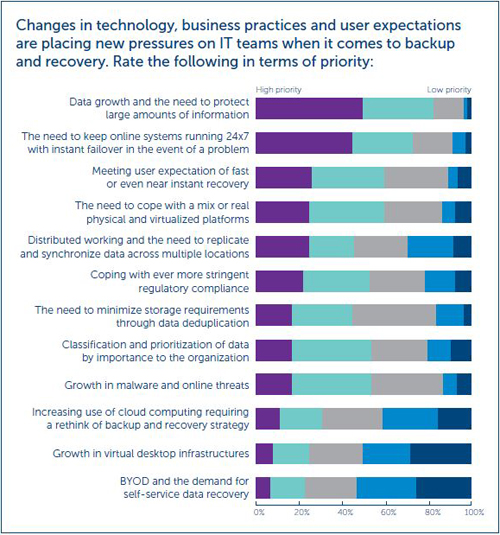

One reason why IT decision makers may not have used recovery times to indicate lost business costs could be the sheer volume of data, applications and host systems that must be protected these days. The survey asked respondents to rate a number of pressures they faced from changes in technology, business practices and user expectations. Data growth and the need to protect large amounts of information came in as the highest priority (see figure).

Given these complex challenges, IT decision makers may find it hard to justify a comprehensive approach to backup and recovery that is designed to protect all IT resources within their organization. Such a business resiliency solution may still be viewed as prohibitively expensive, even when measured against the cost of losing business for days.

A class act to help direct investments

What more can be done? When assessing the impact of such pressures on business resiliency planning, many enterprises simply don’t see initiatives such as bring your own device (BYOD) or even cloud computing as major concerns. Rather, they must cope with the same issues of storage growth, compliance and security that have plagued them for decades.

So instead of trying to protect everything in the same way, why not identify the resources — such as servers, applications and data — that would cause the most operational harm should they become unavailable for any length of time? The simple truth is that not all IT systems or data are of equal importance to the enterprise. Mission-critical systems must be recovered in seconds to keep the business running, whereas servers in occasional use could take much longer to recover without affecting operations.

By classifying resources by importance, IT managers can concentrate backup and recovery investments to make sure priority resources are protected. In addition, they can build adequate redundancy into host platforms, speed up network connections and increase wide area network (WAN) bandwidth. These actions help keep business-critical systems running and improve the ability to replicate backed-up data as added insurance against disaster. And they can choose backup and recovery products expressly designed to handle the mix of platforms and applications deployed — helping ensure that, should the worst happen, business-critical resources can be recovered quickly with minimal impact to the bottom line.
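One simple way to picture such a classification is sketched below in Python. It assigns each resource a tier based on an assumed hourly cost of its being unavailable. The tier names, the thresholds and the sample inventory are all hypothetical and would need to be set by each organization.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    loss_per_hour: float  # assumed business cost of one hour of unavailability


def classify(resource, critical_at=10_000, important_at=1_000):
    """Assign a recovery tier from the assumed hourly cost of an outage."""
    if resource.loss_per_hour >= critical_at:
        return "mission-critical"
    if resource.loss_per_hour >= important_at:
        return "important"
    return "occasional use"


# Hypothetical inventory; names and figures are illustrative only.
inventory = [
    Resource("test-server", 50),
    Resource("order-processing", 25_000),
    Resource("email", 2_000),
]

# List resources from most to least costly outage, with their tier.
for r in sorted(inventory, key=lambda r: r.loss_per_hour, reverse=True):
    print(f"{r.name}: {classify(r)}")
```

The resulting tiers can then guide where the fastest recovery options are worth paying for and where slower, cheaper protection is acceptable.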

Although classification of data by importance to the business seems to be a compelling best practice, only 68 percent of survey respondents indicated doing so. Those who did, however, ranked quicker recovery as the main advantage of this considered approach to business resiliency planning, ahead of reduced storage overhead when taking backups and reduced backup costs.

Match backup and recovery to business priorities

Because recovery requirements should be tied to the value of enterprise systems and data, Dell offers a comprehensive portfolio of field-tested solutions that help organizations back up and recover resources based on specific protection requirements.

Organizations of all sizes can build optimized data protection environments quickly and easily, without changing their overall data protection strategy, by deploying products such as Dell AppAssure backup, replication and recovery software; Dell NetVault Backup cross-platform backup and recovery software; and the Dell DR and Dell DL families of purpose-built backup and recovery appliances. This product portfolio allows IT decision makers to match the software and hardware systems within a business resiliency strategy to application- or system-specific recovery time objectives and recovery point objectives. The result? Assurance that the portfolio enables critical data to be restored in seconds and IT infrastructure in minutes.

Adopting an integrated approach enables IT decision makers to align backup and recovery solutions with strategic business priorities — while also helping to cut costs, reduce complexity and minimize data loss. “When it comes to protecting your business, data recovery is critical to resiliency,” says Srinidhi Varadarajan of Dell. “Rather than treating each system/application backup process separately, think about their relative impact on the business when evaluating what set of tools to use and what to change. Dell’s data protection portfolio has been engineered from the ground up to give you a range of options that accommodate your schedules, budgets and current practices.”

Expecting the unexpected

Equally important to the classification of resources is the need to understand the requirements and limitations of the technologies on which enterprises increasingly rely. For example, virtualization brings many benefits when it comes to hardware consolidation, speed of deployment and operational flexibility. At the same time, it can heighten system vulnerability by enabling multiple virtual machines to be hosted on a single platform.

Backup and recovery solutions should address the needs of virtual as well as physical resources. They also must cope with legacy systems as well as new platforms, be easy to manage and meet the recovery expectations of the organization, its employees and customers. (For more information, see the sidebar, “Match backup and recovery to business priorities.”)

With the right planning for suitable backup and recovery solutions to protect their most important systems, organizations can effectively cope with IT disasters — be they cataclysmic natural events or apparently inconsequential actions that would otherwise take a big toll.